Merge branch 'develop' into add-channels

commit

9c830273da

6620 changed files with 174387 additions and 136546 deletions

|

|

@ -6,7 +6,7 @@

|

|||

* @author Jay Trees <github.jay@grandel.anonaddy.me>

|

||||

*/

|

||||

|

||||

define('VERSION', '0.4.0');

|

||||

define('VERSION', '0.5.0');

|

||||

define('ROOT', __DIR__);

|

||||

define('DEFAULT_LOCALE', 'en_GB');

|

||||

|

||||

|

|

|

|||

819

node_modules/.package-lock.json

generated

vendored

File diff suppressed because it is too large

9

node_modules/@actions/core/LICENSE.md

generated

vendored

Normal file

|

|

@ -0,0 +1,9 @@

|

|||

The MIT License (MIT)

|

||||

|

||||

Copyright 2019 GitHub

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

|

||||

|

||||

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

|

||||

|

||||

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

|

||||

312

node_modules/@actions/core/README.md

generated

vendored

Normal file

|

|

@ -0,0 +1,312 @@

|

|||

# `@actions/core`

|

||||

|

||||

> Core functions for setting results, logging, registering secrets and exporting variables across actions

|

||||

|

||||

## Usage

|

||||

|

||||

### Import the package

|

||||

|

||||

```js

|

||||

// javascript

|

||||

const core = require('@actions/core');

|

||||

|

||||

// typescript

|

||||

import * as core from '@actions/core';

|

||||

```

|

||||

|

||||

#### Inputs/Outputs

|

||||

|

||||

Action inputs can be read with `getInput`, which returns a `string`, or with `getBooleanInput`, which parses a boolean based on the [yaml 1.2 specification](https://yaml.org/spec/1.2/spec.html#id2804923). If `required` is set to false, the input should have a default value in `action.yml`.

|

||||

|

||||

Outputs can be set with `setOutput` which makes them available to be mapped into inputs of other actions to ensure they are decoupled.

|

||||

|

||||

```js

|

||||

const myInput = core.getInput('inputName', { required: true });

|

||||

const myBooleanInput = core.getBooleanInput('booleanInputName', { required: true });

|

||||

const myMultilineInput = core.getMultilineInput('multilineInputName', { required: true });

|

||||

core.setOutput('outputKey', 'outputVal');

|

||||

```
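
As a rough illustration of what these calls do under the hood, the sketch below mirrors the lookup visible in the vendored `lib/core.js` from this commit: inputs arrive as `INPUT_<NAME>` environment variables, with spaces replaced by underscores and the name upper-cased. The helper names `readInput`, `readBooleanInput`, and `readMultilineInput` are hypothetical and not part of the library; treat this as a simplified approximation, not the library's implementation.

```js
// Hypothetical helpers for illustration only; in a real action use core.getInput etc.
function readInput(name, options = {}) {
  // Inputs are exposed to the action as INPUT_<NAME> environment variables.
  const key = `INPUT_${name.replace(/ /g, '_').toUpperCase()}`;
  const val = process.env[key] || '';
  if (options.required && !val) {
    throw new Error(`Input required and not supplied: ${name}`);
  }
  // Whitespace is trimmed unless trimWhitespace is explicitly false.
  return options.trimWhitespace === false ? val : val.trim();
}

function readBooleanInput(name, options) {
  const val = readInput(name, options);
  // Only the YAML 1.2 core schema spellings are accepted.
  if (['true', 'True', 'TRUE'].includes(val)) return true;
  if (['false', 'False', 'FALSE'].includes(val)) return false;
  throw new TypeError(`Input "${name}" is not a YAML 1.2 core schema boolean: ${val}`);
}

function readMultilineInput(name, options) {
  // One value per line; empty lines are dropped.
  return readInput(name, options).split('\n').filter(x => x !== '');
}
```

Note that a missing optional input comes back as an empty string rather than `undefined`, which is why a default value in `action.yml` is recommended.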

|

||||

|

||||

#### Exporting variables

|

||||

|

||||

Since each step runs in a separate process, you can use `exportVariable` to add a variable to the environment blocks of this step and of future steps.

|

||||

|

||||

```js

|

||||

core.exportVariable('envVar', 'Val');

|

||||

```
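
For context, the vendored `lib/core.js` in this commit writes the variable to the file named by `GITHUB_ENV` using a heredoc-style entry when that file is available. The sketch below only builds that entry as a string; `formatEnvFileEntry` is a hypothetical name, and the value conversion is simplified to what the type declarations describe (non-string values go through `JSON.stringify`).

```js
const os = require('os');

// Delimiter string copied verbatim from the vendored lib/core.js (including its spelling).
const DELIMITER = '_GitHubActionsFileCommandDelimeter_';

// Hypothetical helper: returns the text of one environment-file entry.
function formatEnvFileEntry(name, value) {
  const converted = typeof value === 'string' ? value : JSON.stringify(value);
  // Shape: NAME<<DELIMITER, then the value, then DELIMITER on its own line.
  return `${name}<<${DELIMITER}${os.EOL}${converted}${os.EOL}${DELIMITER}`;
}
```

The delimiter form lets a value span several lines without being mistaken for the start of another entry.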

|

||||

|

||||

#### Setting a secret

|

||||

|

||||

Setting a secret registers the secret with the runner to ensure it is masked in logs.

|

||||

|

||||

```js

|

||||

core.setSecret('myPassword');

|

||||

```
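
In the vendored `lib/core.js`, `setSecret` is a thin wrapper that issues an `add-mask` workflow command on stdout. The sketch below approximates the command formatting and escaping shown in `lib/command.js` from this commit; `formatCommand` is a hypothetical name, and `String(s)` stands in for the library's own value conversion, so this is an approximation rather than the library's code.

```js
// Message escaping: percent signs first, then carriage returns and newlines.
function escapeData(s) {
  return String(s).replace(/%/g, '%25').replace(/\r/g, '%0D').replace(/\n/g, '%0A');
}

// Property values additionally escape ':' and ',' because those delimit properties.
function escapeProperty(s) {
  return escapeData(s).replace(/:/g, '%3A').replace(/,/g, '%2C');
}

// Hypothetical helper producing "::name key=value,key=value::message".
function formatCommand(name, properties = {}, message = '') {
  let cmd = `::${name || 'missing.command'}`;
  if (Object.keys(properties).length > 0) {
    cmd += ' ' + Object.entries(properties)
      .filter(([, val]) => val) // falsy property values are skipped
      .map(([key, val]) => `${key}=${escapeProperty(val)}`)
      .join(',');
  }
  return `${cmd}::${escapeData(message)}`;
}
```

With these helpers, `formatCommand('add-mask', {}, 'myPassword')` yields `::add-mask::myPassword`, which is the line the runner reads to start masking that value.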

|

||||

|

||||

#### PATH Manipulation

|

||||

|

||||

To make a tool's path available in the path for the remainder of the job (without altering the machine's or container's state), use `addPath`. The runner will prepend the given path to the job's PATH.

|

||||

|

||||

```js

|

||||

core.addPath('/path/to/mytool');

|

||||

```
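
Prepending matters because the first matching directory on PATH wins. The sketch below shows the same string construction that the vendored `lib/core.js` applies to `process.env['PATH']`, but as a pure function; `prependToPath` is a hypothetical name used only for illustration.

```js
const path = require('path');

// Hypothetical helper: returns the new PATH value instead of mutating process.env.
function prependToPath(currentPath, toolDir) {
  // path.delimiter is ':' on POSIX systems and ';' on Windows.
  return `${toolDir}${path.delimiter}${currentPath}`;
}
```

Because the tool directory comes first, a binary placed there shadows any same-named binary already installed on the runner.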

|

||||

|

||||

#### Exit codes

|

||||

|

||||

You should use this library to set the failing exit code for your action. If status is not set and the script runs to completion, that will lead to a success.

|

||||

|

||||

```js

|

||||

const core = require('@actions/core');

|

||||

|

||||

try {

|

||||

// Do stuff

|

||||

}

|

||||

catch (err) {

|

||||

// setFailed logs the message and sets a failing exit code

|

||||

core.setFailed(`Action failed with error ${err}`);

|

||||

}

|

||||

```

|

||||

|

||||

Note that `setNeutral` is not yet implemented in actions V2 but equivalent functionality is being planned.

|

||||

|

||||

#### Logging

|

||||

|

||||

Finally, this library provides some utilities for logging. Note that debug logging is hidden from the logs by default. This behavior can be toggled by enabling the [Step Debug Logs](../../docs/action-debugging.md#step-debug-logs).

|

||||

|

||||

```js

|

||||

const core = require('@actions/core');

|

||||

|

||||

const myInput = core.getInput('input');

|

||||

try {

|

||||

core.debug('Inside try block');

|

||||

|

||||

if (!myInput) {

|

||||

core.warning('myInput was not set');

|

||||

}

|

||||

|

||||

if (core.isDebug()) {

|

||||

// curl -v https://github.com

|

||||

} else {

|

||||

// curl https://github.com

|

||||

}

|

||||

|

||||

// Do stuff

|

||||

core.info('Output to the actions build log')

|

||||

|

||||

core.notice('This is a message that will also emit an annotation')

|

||||

}

|

||||

catch (err) {

|

||||

core.error(`Error ${err}, action may still succeed though`);

|

||||

}

|

||||

```

|

||||

|

||||

This library can also wrap chunks of output in foldable groups.

|

||||

|

||||

```js

|

||||

const core = require('@actions/core')

|

||||

|

||||

// Manually wrap output

|

||||

core.startGroup('Do some function')

|

||||

doSomeFunction()

|

||||

core.endGroup()

|

||||

|

||||

// Wrap an asynchronous function call

|

||||

const result = await core.group('Do something async', async () => {

|

||||

const response = await doSomeHTTPRequest()

|

||||

return response

|

||||

})

|

||||

```

|

||||

|

||||

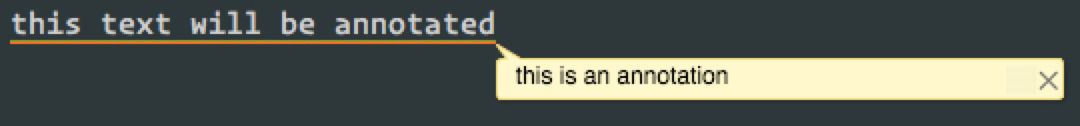

#### Annotations

|

||||

|

||||

This library has 3 methods that will produce [annotations](https://docs.github.com/en/rest/reference/checks#create-a-check-run).

|

||||

```js

|

||||

core.error('This is a bad error. The action may still succeed though.')

|

||||

|

||||

core.warning('Something went wrong, but it\'s not bad enough to fail the build.')

|

||||

|

||||

core.notice('Something happened that you might want to know about.')

|

||||

```

|

||||

|

||||

These will surface to the UI in the Actions page and on Pull Requests. They look something like this:

|

||||

|

||||

|

||||

|

||||

These annotations can also be attached to particular lines and columns of your source files to show exactly where a problem is occurring.

|

||||

|

||||

These options are:

|

||||

```typescript

|

||||

export interface AnnotationProperties {

|

||||

/**

|

||||

* A title for the annotation.

|

||||

*/

|

||||

title?: string

|

||||

|

||||

/**

|

||||

* The name of the file for which the annotation should be created.

|

||||

*/

|

||||

file?: string

|

||||

|

||||

/**

|

||||

* The start line for the annotation.

|

||||

*/

|

||||

startLine?: number

|

||||

|

||||

/**

|

||||

* The end line for the annotation. Defaults to `startLine` when `startLine` is provided.

|

||||

*/

|

||||

endLine?: number

|

||||

|

||||

/**

|

||||

* The start column for the annotation. Cannot be sent when `startLine` and `endLine` are different values.

|

||||

*/

|

||||

startColumn?: number

|

||||

|

||||

/**

|

||||

* The end column for the annotation. Cannot be sent when `startLine` and `endLine` are different values.

|

||||

* Defaults to `startColumn` when `startColumn` is provided.

|

||||

*/

|

||||

endColumn?: number

|

||||

}

|

||||

```

|

||||

|

||||

#### Styling output

|

||||

|

||||

Colored output is supported in the Action logs via standard [ANSI escape codes](https://en.wikipedia.org/wiki/ANSI_escape_code). 3/4 bit, 8 bit and 24 bit colors are all supported.

|

||||

|

||||

Foreground colors:

|

||||

|

||||

```js

|

||||

// 3/4 bit

|

||||

core.info('\u001b[35mThis foreground will be magenta')

|

||||

|

||||

// 8 bit

|

||||

core.info('\u001b[38;5;6mThis foreground will be cyan')

|

||||

|

||||

// 24 bit

|

||||

core.info('\u001b[38;2;255;0;0mThis foreground will be bright red')

|

||||

```

|

||||

|

||||

Background colors:

|

||||

|

||||

```js

|

||||

// 3/4 bit

|

||||

core.info('\u001b[43mThis background will be yellow');

|

||||

|

||||

// 8 bit

|

||||

core.info('\u001b[48;5;6mThis background will be cyan')

|

||||

|

||||

// 24 bit

|

||||

core.info('\u001b[48;2;255;0;0mThis background will be bright red')

|

||||

```

|

||||

|

||||

Special styles:

|

||||

|

||||

```js

|

||||

core.info('\u001b[1mBold text')

|

||||

core.info('\u001b[3mItalic text')

|

||||

core.info('\u001b[4mUnderlined text')

|

||||

```

|

||||

|

||||

ANSI escape codes can be combined with one another:

|

||||

|

||||

```js

|

||||

core.info('\u001b[31;46mRed foreground with a cyan background and \u001b[1mbold text at the end');

|

||||

```

|

||||

|

||||

> Note: Escape codes reset at the start of each line

|

||||

|

||||

```js

|

||||

core.info('\u001b[35mThis foreground will be magenta')

|

||||

core.info('This foreground will reset to the default')

|

||||

```

|

||||

|

||||

Manually typing escape codes can be a little difficult, but you can use third party modules such as [ansi-styles](https://github.com/chalk/ansi-styles).

|

||||

|

||||

```js

|

||||

const style = require('ansi-styles');

|

||||

core.info(style.color.ansi16m.hex('#abcdef') + 'Hello world!')

|

||||

```
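
If pulling in a dependency is not desirable, a small helper can assemble the escape sequences by hand. The sketch below is one possible approach, not part of `@actions/core`; the names `sgr` and `sgrLines` are hypothetical. It joins SGR codes with `;` and appends the reset code `0`, and it styles each line separately because escape codes reset at the start of each line in the log.

```js
const ESC = '\u001b[';
const RESET = `${ESC}0m`;

// Wraps text in the given SGR codes (e.g. 35 = magenta foreground, 1 = bold).
function sgr(text, ...codes) {
  return `${ESC}${codes.join(';')}m${text}${RESET}`;
}

// Applies the same codes to every line of a multi-line string.
function sgrLines(text, ...codes) {
  return text.split('\n').map(line => sgr(line, ...codes)).join('\n');
}
```

The result can be passed straight to `core.info`, for example `core.info(sgr('Red on cyan', 31, 46))`.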

|

||||

|

||||

#### Action state

|

||||

|

||||

You can use this library to save state in an action's `main` step and read it back in the same action's `post` step, for example in a wrapper action:

|

||||

|

||||

**action.yml**:

|

||||

|

||||

```yaml

|

||||

name: 'Wrapper action sample'

|

||||

inputs:

|

||||

name:

|

||||

default: 'GitHub'

|

||||

runs:

|

||||

using: 'node12'

|

||||

main: 'main.js'

|

||||

post: 'cleanup.js'

|

||||

```

|

||||

|

||||

In action's `main.js`:

|

||||

|

||||

```js

|

||||

const core = require('@actions/core');

|

||||

|

||||

core.saveState("pidToKill", 12345);

|

||||

```

|

||||

|

||||

In action's `cleanup.js`:

|

||||

|

||||

```js

|

||||

const core = require('@actions/core');

|

||||

|

||||

var pid = core.getState("pidToKill");

|

||||

|

||||

process.kill(pid);

|

||||

```
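
According to the type declarations in this commit, `getState` returns a `string`, and non-string values passed to `saveState` are converted with `JSON.stringify`. A cleanup script may therefore want to convert and validate the value before acting on it. The sketch below is one way to do that; `parsePid` is a hypothetical helper, not part of the library.

```js
// Hypothetical helper: turns saved state back into a positive integer pid.
function parsePid(raw) {
  const pid = Number.parseInt(raw, 10);
  // Reject empty state, non-numeric text, and values with trailing garbage.
  if (!Number.isInteger(pid) || pid <= 0 || String(pid) !== String(raw).trim()) {
    throw new Error(`Saved state is not a valid pid: ${JSON.stringify(raw)}`);
  }
  return pid;
}
```

In `cleanup.js` this could be used as `process.kill(parsePid(core.getState('pidToKill')))`, so that missing or malformed state fails loudly instead of signalling an unintended process.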

|

||||

|

||||

#### OIDC Token

|

||||

|

||||

You can use these methods to interact with the GitHub OIDC provider and get a JWT ID token, which can then be exchanged for an access token from third-party cloud providers.

|

||||

|

||||

**Method Name**: getIDToken()

|

||||

|

||||

**Inputs**

|

||||

|

||||

audience : optional

|

||||

|

||||

**Outputs**

|

||||

|

||||

A [JWT](https://jwt.io/) ID Token

|

||||

|

||||

In action's `main.ts`:

|

||||

```typescript

|

||||

const core = require('@actions/core');

|

||||

async function getIDTokenAction(): Promise<void> {

|

||||

|

||||

const audience = core.getInput('audience', {required: false})

|

||||

|

||||

const id_token1 = await core.getIDToken() // ID Token with default audience

|

||||

const id_token2 = await core.getIDToken(audience) // ID token with custom audience

|

||||

|

||||

// this id_token can be used to get access token from third party cloud providers

|

||||

}

|

||||

getIDTokenAction()

|

||||

```
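
When debugging an audience mismatch, it can help to look at the claims inside the returned token. The sketch below decodes the payload segment of a JWT using only Node's `Buffer`; `decodeJwtPayload` is a hypothetical helper, not part of `@actions/core`. It does not verify the signature, so it is suitable for inspection only, and decoded tokens should not be written to logs.

```js
// Hypothetical helper: returns the parsed claims of a JWT without verifying it.
function decodeJwtPayload(token) {
  const parts = token.split('.');
  if (parts.length !== 3) {
    throw new Error('Expected a JWT with three dot-separated parts');
  }
  // Node's 'base64' decoder also accepts the URL-safe alphabet used by JWTs.
  return JSON.parse(Buffer.from(parts[1], 'base64').toString('utf8'));
}
```

For a token requested with a custom audience, the `aud` claim of the decoded payload is expected to carry that audience value.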

|

||||

|

||||

In action's `action.yml`:

|

||||

|

||||

```yaml

|

||||

name: 'GetIDToken'

|

||||

description: 'Get ID token from Github OIDC provider'

|

||||

inputs:

|

||||

audience:

|

||||

description: 'Audience for which the ID token is intended for'

|

||||

required: false

|

||||

outputs:

|

||||

id_token1:

|

||||

description: 'ID token obtained from OIDC provider'

|

||||

id_token2:

|

||||

description: 'ID token obtained from OIDC provider'

|

||||

runs:

|

||||

using: 'node12'

|

||||

main: 'dist/index.js'

|

||||

```

|

||||

15

node_modules/@actions/core/lib/command.d.ts

generated

vendored

Normal file

|

|

@ -0,0 +1,15 @@

|

|||

export interface CommandProperties {

|

||||

[key: string]: any;

|

||||

}

|

||||

/**

|

||||

* Commands

|

||||

*

|

||||

* Command Format:

|

||||

* ::name key=value,key=value::message

|

||||

*

|

||||

* Examples:

|

||||

* ::warning::This is the message

|

||||

* ::set-env name=MY_VAR::some value

|

||||

*/

|

||||

export declare function issueCommand(command: string, properties: CommandProperties, message: any): void;

|

||||

export declare function issue(name: string, message?: string): void;

|

||||

92

node_modules/@actions/core/lib/command.js

generated

vendored

Normal file

|

|

@ -0,0 +1,92 @@

|

|||

"use strict";

|

||||

var __createBinding = (this && this.__createBinding) || (Object.create ? (function(o, m, k, k2) {

|

||||

if (k2 === undefined) k2 = k;

|

||||

Object.defineProperty(o, k2, { enumerable: true, get: function() { return m[k]; } });

|

||||

}) : (function(o, m, k, k2) {

|

||||

if (k2 === undefined) k2 = k;

|

||||

o[k2] = m[k];

|

||||

}));

|

||||

var __setModuleDefault = (this && this.__setModuleDefault) || (Object.create ? (function(o, v) {

|

||||

Object.defineProperty(o, "default", { enumerable: true, value: v });

|

||||

}) : function(o, v) {

|

||||

o["default"] = v;

|

||||

});

|

||||

var __importStar = (this && this.__importStar) || function (mod) {

|

||||

if (mod && mod.__esModule) return mod;

|

||||

var result = {};

|

||||

if (mod != null) for (var k in mod) if (k !== "default" && Object.hasOwnProperty.call(mod, k)) __createBinding(result, mod, k);

|

||||

__setModuleDefault(result, mod);

|

||||

return result;

|

||||

};

|

||||

Object.defineProperty(exports, "__esModule", { value: true });

|

||||

exports.issue = exports.issueCommand = void 0;

|

||||

const os = __importStar(require("os"));

|

||||

const utils_1 = require("./utils");

|

||||

/**

|

||||

* Commands

|

||||

*

|

||||

* Command Format:

|

||||

* ::name key=value,key=value::message

|

||||

*

|

||||

* Examples:

|

||||

* ::warning::This is the message

|

||||

* ::set-env name=MY_VAR::some value

|

||||

*/

|

||||

function issueCommand(command, properties, message) {

|

||||

const cmd = new Command(command, properties, message);

|

||||

process.stdout.write(cmd.toString() + os.EOL);

|

||||

}

|

||||

exports.issueCommand = issueCommand;

|

||||

function issue(name, message = '') {

|

||||

issueCommand(name, {}, message);

|

||||

}

|

||||

exports.issue = issue;

|

||||

const CMD_STRING = '::';

|

||||

class Command {

|

||||

constructor(command, properties, message) {

|

||||

if (!command) {

|

||||

command = 'missing.command';

|

||||

}

|

||||

this.command = command;

|

||||

this.properties = properties;

|

||||

this.message = message;

|

||||

}

|

||||

toString() {

|

||||

let cmdStr = CMD_STRING + this.command;

|

||||

if (this.properties && Object.keys(this.properties).length > 0) {

|

||||

cmdStr += ' ';

|

||||

let first = true;

|

||||

for (const key in this.properties) {

|

||||

if (this.properties.hasOwnProperty(key)) {

|

||||

const val = this.properties[key];

|

||||

if (val) {

|

||||

if (first) {

|

||||

first = false;

|

||||

}

|

||||

else {

|

||||

cmdStr += ',';

|

||||

}

|

||||

cmdStr += `${key}=${escapeProperty(val)}`;

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

cmdStr += `${CMD_STRING}${escapeData(this.message)}`;

|

||||

return cmdStr;

|

||||

}

|

||||

}

|

||||

function escapeData(s) {

|

||||

return utils_1.toCommandValue(s)

|

||||

.replace(/%/g, '%25')

|

||||

.replace(/\r/g, '%0D')

|

||||

.replace(/\n/g, '%0A');

|

||||

}

|

||||

function escapeProperty(s) {

|

||||

return utils_1.toCommandValue(s)

|

||||

.replace(/%/g, '%25')

|

||||

.replace(/\r/g, '%0D')

|

||||

.replace(/\n/g, '%0A')

|

||||

.replace(/:/g, '%3A')

|

||||

.replace(/,/g, '%2C');

|

||||

}

|

||||

//# sourceMappingURL=command.js.map

|

||||

1

node_modules/@actions/core/lib/command.js.map

generated

vendored

Normal file

|

|

@ -0,0 +1 @@

|

|||

{"version":3,"file":"command.js","sourceRoot":"","sources":["../src/command.ts"],"names":[],"mappings":";;;;;;;;;;;;;;;;;;;;;;AAAA,uCAAwB;AACxB,mCAAsC;AAWtC;;;;;;;;;GASG;AACH,SAAgB,YAAY,CAC1B,OAAe,EACf,UAA6B,EAC7B,OAAY;IAEZ,MAAM,GAAG,GAAG,IAAI,OAAO,CAAC,OAAO,EAAE,UAAU,EAAE,OAAO,CAAC,CAAA;IACrD,OAAO,CAAC,MAAM,CAAC,KAAK,CAAC,GAAG,CAAC,QAAQ,EAAE,GAAG,EAAE,CAAC,GAAG,CAAC,CAAA;AAC/C,CAAC;AAPD,oCAOC;AAED,SAAgB,KAAK,CAAC,IAAY,EAAE,OAAO,GAAG,EAAE;IAC9C,YAAY,CAAC,IAAI,EAAE,EAAE,EAAE,OAAO,CAAC,CAAA;AACjC,CAAC;AAFD,sBAEC;AAED,MAAM,UAAU,GAAG,IAAI,CAAA;AAEvB,MAAM,OAAO;IAKX,YAAY,OAAe,EAAE,UAA6B,EAAE,OAAe;QACzE,IAAI,CAAC,OAAO,EAAE;YACZ,OAAO,GAAG,iBAAiB,CAAA;SAC5B;QAED,IAAI,CAAC,OAAO,GAAG,OAAO,CAAA;QACtB,IAAI,CAAC,UAAU,GAAG,UAAU,CAAA;QAC5B,IAAI,CAAC,OAAO,GAAG,OAAO,CAAA;IACxB,CAAC;IAED,QAAQ;QACN,IAAI,MAAM,GAAG,UAAU,GAAG,IAAI,CAAC,OAAO,CAAA;QAEtC,IAAI,IAAI,CAAC,UAAU,IAAI,MAAM,CAAC,IAAI,CAAC,IAAI,CAAC,UAAU,CAAC,CAAC,MAAM,GAAG,CAAC,EAAE;YAC9D,MAAM,IAAI,GAAG,CAAA;YACb,IAAI,KAAK,GAAG,IAAI,CAAA;YAChB,KAAK,MAAM,GAAG,IAAI,IAAI,CAAC,UAAU,EAAE;gBACjC,IAAI,IAAI,CAAC,UAAU,CAAC,cAAc,CAAC,GAAG,CAAC,EAAE;oBACvC,MAAM,GAAG,GAAG,IAAI,CAAC,UAAU,CAAC,GAAG,CAAC,CAAA;oBAChC,IAAI,GAAG,EAAE;wBACP,IAAI,KAAK,EAAE;4BACT,KAAK,GAAG,KAAK,CAAA;yBACd;6BAAM;4BACL,MAAM,IAAI,GAAG,CAAA;yBACd;wBAED,MAAM,IAAI,GAAG,GAAG,IAAI,cAAc,CAAC,GAAG,CAAC,EAAE,CAAA;qBAC1C;iBACF;aACF;SACF;QAED,MAAM,IAAI,GAAG,UAAU,GAAG,UAAU,CAAC,IAAI,CAAC,OAAO,CAAC,EAAE,CAAA;QACpD,OAAO,MAAM,CAAA;IACf,CAAC;CACF;AAED,SAAS,UAAU,CAAC,CAAM;IACxB,OAAO,sBAAc,CAAC,CAAC,CAAC;SACrB,OAAO,CAAC,IAAI,EAAE,KAAK,CAAC;SACpB,OAAO,CAAC,KAAK,EAAE,KAAK,CAAC;SACrB,OAAO,CAAC,KAAK,EAAE,KAAK,CAAC,CAAA;AAC1B,CAAC;AAED,SAAS,cAAc,CAAC,CAAM;IAC5B,OAAO,sBAAc,CAAC,CAAC,CAAC;SACrB,OAAO,CAAC,IAAI,EAAE,KAAK,CAAC;SACpB,OAAO,CAAC,KAAK,EAAE,KAAK,CAAC;SACrB,OAAO,CAAC,KAAK,EAAE,KAAK,CAAC;SACrB,OAAO,CAAC,IAAI,EAAE,KAAK,CAAC;SACpB,OAAO,CAAC,IAAI,EAAE,KAAK,CAAC,CAAA;AACzB,CAAC"}

|

||||

186

node_modules/@actions/core/lib/core.d.ts

generated

vendored

Normal file

|

|

@ -0,0 +1,186 @@

|

|||

/**

|

||||

* Interface for getInput options

|

||||

*/

|

||||

export interface InputOptions {

|

||||

/** Optional. Whether the input is required. If required and not present, will throw. Defaults to false */

|

||||

required?: boolean;

|

||||

/** Optional. Whether leading/trailing whitespace will be trimmed for the input. Defaults to true */

|

||||

trimWhitespace?: boolean;

|

||||

}

|

||||

/**

|

||||

* The code to exit an action

|

||||

*/

|

||||

export declare enum ExitCode {

|

||||

/**

|

||||

* A code indicating that the action was successful

|

||||

*/

|

||||

Success = 0,

|

||||

/**

|

||||

* A code indicating that the action was a failure

|

||||

*/

|

||||

Failure = 1

|

||||

}

|

||||

/**

|

||||

* Optional properties that can be sent with annotation commands (notice, error, and warning)

|

||||

* See: https://docs.github.com/en/rest/reference/checks#create-a-check-run for more information about annotations.

|

||||

*/

|

||||

export interface AnnotationProperties {

|

||||

/**

|

||||

* A title for the annotation.

|

||||

*/

|

||||

title?: string;

|

||||

/**

|

||||

* The path of the file for which the annotation should be created.

|

||||

*/

|

||||

file?: string;

|

||||

/**

|

||||

* The start line for the annotation.

|

||||

*/

|

||||

startLine?: number;

|

||||

/**

|

||||

* The end line for the annotation. Defaults to `startLine` when `startLine` is provided.

|

||||

*/

|

||||

endLine?: number;

|

||||

/**

|

||||

* The start column for the annotation. Cannot be sent when `startLine` and `endLine` are different values.

|

||||

*/

|

||||

startColumn?: number;

|

||||

/**

|

||||

* The end column for the annotation. Cannot be sent when `startLine` and `endLine` are different values.

|

||||

* Defaults to `startColumn` when `startColumn` is provided.

|

||||

*/

|

||||

endColumn?: number;

|

||||

}

|

||||

/**

|

||||

* Sets env variable for this action and future actions in the job

|

||||

* @param name the name of the variable to set

|

||||

* @param val the value of the variable. Non-string values will be converted to a string via JSON.stringify

|

||||

*/

|

||||

export declare function exportVariable(name: string, val: any): void;

|

||||

/**

|

||||

* Registers a secret which will get masked from logs

|

||||

* @param secret value of the secret

|

||||

*/

|

||||

export declare function setSecret(secret: string): void;

|

||||

/**

|

||||

* Prepends inputPath to the PATH (for this action and future actions)

|

||||

* @param inputPath

|

||||

*/

|

||||

export declare function addPath(inputPath: string): void;

|

||||

/**

|

||||

* Gets the value of an input.

|

||||

* Unless trimWhitespace is set to false in InputOptions, the value is also trimmed.

|

||||

* Returns an empty string if the value is not defined.

|

||||

*

|

||||

* @param name name of the input to get

|

||||

* @param options optional. See InputOptions.

|

||||

* @returns string

|

||||

*/

|

||||

export declare function getInput(name: string, options?: InputOptions): string;

|

||||

/**

|

||||

* Gets the values of a multiline input. Each value is also trimmed.

|

||||

*

|

||||

* @param name name of the input to get

|

||||

* @param options optional. See InputOptions.

|

||||

* @returns string[]

|

||||

*

|

||||

*/

|

||||

export declare function getMultilineInput(name: string, options?: InputOptions): string[];

|

||||

/**

|

||||

* Gets the input value of the boolean type in the YAML 1.2 "core schema" specification.

|

||||

* Support boolean input list: `true | True | TRUE | false | False | FALSE` .

|

||||

* The return value is also in boolean type.

|

||||

* ref: https://yaml.org/spec/1.2/spec.html#id2804923

|

||||

*

|

||||

* @param name name of the input to get

|

||||

* @param options optional. See InputOptions.

|

||||

* @returns boolean

|

||||

*/

|

||||

export declare function getBooleanInput(name: string, options?: InputOptions): boolean;

|

||||

/**

|

||||

* Sets the value of an output.

|

||||

*

|

||||

* @param name name of the output to set

|

||||

* @param value value to store. Non-string values will be converted to a string via JSON.stringify

|

||||

*/

|

||||

export declare function setOutput(name: string, value: any): void;

|

||||

/**

|

||||

* Enables or disables the echoing of commands into stdout for the rest of the step.

|

||||

* Echoing is disabled by default if ACTIONS_STEP_DEBUG is not set.

|

||||

*

|

||||

*/

|

||||

export declare function setCommandEcho(enabled: boolean): void;

|

||||

/**

|

||||

* Sets the action status to failed.

|

||||

* When the action exits it will be with an exit code of 1

|

||||

* @param message add error issue message

|

||||

*/

|

||||

export declare function setFailed(message: string | Error): void;

|

||||

/**

|

||||

* Gets whether Actions Step Debug is on or not

|

||||

*/

|

||||

export declare function isDebug(): boolean;

|

||||

/**

|

||||

* Writes debug message to user log

|

||||

* @param message debug message

|

||||

*/

|

||||

export declare function debug(message: string): void;

|

||||

/**

|

||||

* Adds an error issue

|

||||

* @param message error issue message. Errors will be converted to string via toString()

|

||||

* @param properties optional properties to add to the annotation.

|

||||

*/

|

||||

export declare function error(message: string | Error, properties?: AnnotationProperties): void;

|

||||

/**

|

||||

* Adds a warning issue

|

||||

* @param message warning issue message. Errors will be converted to string via toString()

|

||||

* @param properties optional properties to add to the annotation.

|

||||

*/

|

||||

export declare function warning(message: string | Error, properties?: AnnotationProperties): void;

|

||||

/**

|

||||

* Adds a notice issue

|

||||

* @param message notice issue message. Errors will be converted to string via toString()

|

||||

* @param properties optional properties to add to the annotation.

|

||||

*/

|

||||

export declare function notice(message: string | Error, properties?: AnnotationProperties): void;

|

||||

/**

|

||||

* Writes info to log with console.log.

|

||||

* @param message info message

|

||||

*/

|

||||

export declare function info(message: string): void;

|

||||

/**

|

||||

* Begin an output group.

|

||||

*

|

||||

* Output until the next `endGroup` will be foldable in this group

|

||||

*

|

||||

* @param name The name of the output group

|

||||

*/

|

||||

export declare function startGroup(name: string): void;

|

||||

/**

|

||||

* End an output group.

|

||||

*/

|

||||

export declare function endGroup(): void;

|

||||

/**

|

||||

* Wrap an asynchronous function call in a group.

|

||||

*

|

||||

* Returns the same type as the function itself.

|

||||

*

|

||||

* @param name The name of the group

|

||||

* @param fn The function to wrap in the group

|

||||

*/

|

||||

export declare function group<T>(name: string, fn: () => Promise<T>): Promise<T>;

|

||||

/**

|

||||

* Saves state for current action, the state can only be retrieved by this action's post job execution.

|

||||

*

|

||||

* @param name name of the state to store

|

||||

* @param value value to store. Non-string values will be converted to a string via JSON.stringify

|

||||

*/

|

||||

export declare function saveState(name: string, value: any): void;

|

||||

/**

|

||||

* Gets the value of a state set by this action's main execution.

|

||||

*

|

||||

* @param name name of the state to get

|

||||

* @returns string

|

||||

*/

|

||||

export declare function getState(name: string): string;

|

||||

export declare function getIDToken(aud?: string): Promise<string>;

|

||||

312

node_modules/@actions/core/lib/core.js

generated

vendored

Normal file

|

|

@ -0,0 +1,312 @@

|

|||

"use strict";

|

||||

var __createBinding = (this && this.__createBinding) || (Object.create ? (function(o, m, k, k2) {

|

||||

if (k2 === undefined) k2 = k;

|

||||

Object.defineProperty(o, k2, { enumerable: true, get: function() { return m[k]; } });

|

||||

}) : (function(o, m, k, k2) {

|

||||

if (k2 === undefined) k2 = k;

|

||||

o[k2] = m[k];

|

||||

}));

|

||||

var __setModuleDefault = (this && this.__setModuleDefault) || (Object.create ? (function(o, v) {

|

||||

Object.defineProperty(o, "default", { enumerable: true, value: v });

|

||||

}) : function(o, v) {

|

||||

o["default"] = v;

|

||||

});

|

||||

var __importStar = (this && this.__importStar) || function (mod) {

|

||||

if (mod && mod.__esModule) return mod;

|

||||

var result = {};

|

||||

if (mod != null) for (var k in mod) if (k !== "default" && Object.hasOwnProperty.call(mod, k)) __createBinding(result, mod, k);

|

||||

__setModuleDefault(result, mod);

|

||||

return result;

|

||||

};

|

||||

var __awaiter = (this && this.__awaiter) || function (thisArg, _arguments, P, generator) {

|

||||

function adopt(value) { return value instanceof P ? value : new P(function (resolve) { resolve(value); }); }

|

||||

return new (P || (P = Promise))(function (resolve, reject) {

|

||||

function fulfilled(value) { try { step(generator.next(value)); } catch (e) { reject(e); } }

|

||||

function rejected(value) { try { step(generator["throw"](value)); } catch (e) { reject(e); } }

|

||||

function step(result) { result.done ? resolve(result.value) : adopt(result.value).then(fulfilled, rejected); }

|

||||

step((generator = generator.apply(thisArg, _arguments || [])).next());

|

||||

});

|

||||

};

|

||||

Object.defineProperty(exports, "__esModule", { value: true });

|

||||

exports.getIDToken = exports.getState = exports.saveState = exports.group = exports.endGroup = exports.startGroup = exports.info = exports.notice = exports.warning = exports.error = exports.debug = exports.isDebug = exports.setFailed = exports.setCommandEcho = exports.setOutput = exports.getBooleanInput = exports.getMultilineInput = exports.getInput = exports.addPath = exports.setSecret = exports.exportVariable = exports.ExitCode = void 0;

|

||||

const command_1 = require("./command");

|

||||

const file_command_1 = require("./file-command");

|

||||

const utils_1 = require("./utils");

|

||||

const os = __importStar(require("os"));

|

||||

const path = __importStar(require("path"));

|

||||

const oidc_utils_1 = require("./oidc-utils");

|

||||

/**

|

||||

* The code to exit an action

|

||||

*/

|

||||

var ExitCode;

|

||||

(function (ExitCode) {

|

||||

/**

|

||||

* A code indicating that the action was successful

|

||||

*/

|

||||

ExitCode[ExitCode["Success"] = 0] = "Success";

|

||||

/**

|

||||

* A code indicating that the action was a failure

|

||||

*/

|

||||

ExitCode[ExitCode["Failure"] = 1] = "Failure";

|

||||

})(ExitCode = exports.ExitCode || (exports.ExitCode = {}));

|

||||

//-----------------------------------------------------------------------

|

||||

// Variables

|

||||

//-----------------------------------------------------------------------

|

||||

/**

|

||||

* Sets env variable for this action and future actions in the job

|

||||

* @param name the name of the variable to set

|

||||

* @param val the value of the variable. Non-string values will be converted to a string via JSON.stringify

|

||||

*/

|

||||

// eslint-disable-next-line @typescript-eslint/no-explicit-any

|

||||

function exportVariable(name, val) {

|

||||

const convertedVal = utils_1.toCommandValue(val);

|

||||

process.env[name] = convertedVal;

|

||||

const filePath = process.env['GITHUB_ENV'] || '';

|

||||

if (filePath) {

|

||||

const delimiter = '_GitHubActionsFileCommandDelimeter_';

|

||||

const commandValue = `${name}<<${delimiter}${os.EOL}${convertedVal}${os.EOL}${delimiter}`;

|

||||

file_command_1.issueCommand('ENV', commandValue);

|

||||

}

|

||||

else {

|

||||

command_1.issueCommand('set-env', { name }, convertedVal);

|

||||

}

|

||||

}

|

||||

exports.exportVariable = exportVariable;

|

||||

/**

|

||||

* Registers a secret which will get masked from logs

|

||||

* @param secret value of the secret

|

||||

*/

|

||||

function setSecret(secret) {

|

||||

command_1.issueCommand('add-mask', {}, secret);

|

||||

}

|

||||

exports.setSecret = setSecret;

|

||||

/**

|

||||

* Prepends inputPath to the PATH (for this action and future actions)

|

||||

* @param inputPath

|

||||

*/

|

||||

function addPath(inputPath) {

|

||||

const filePath = process.env['GITHUB_PATH'] || '';

|

||||

if (filePath) {

|

||||

file_command_1.issueCommand('PATH', inputPath);

|

||||

}

|

||||

else {

|

||||

command_1.issueCommand('add-path', {}, inputPath);

|

||||

}

|

||||

process.env['PATH'] = `${inputPath}${path.delimiter}${process.env['PATH']}`;

|

||||

}

|

||||

exports.addPath = addPath;

|

||||

/**

|

||||

* Gets the value of an input.

|

||||

* Unless trimWhitespace is set to false in InputOptions, the value is also trimmed.

|

||||

* Returns an empty string if the value is not defined.

|

||||

*

|

||||

* @param name name of the input to get

|

||||

* @param options optional. See InputOptions.

|

||||

* @returns string

|

||||

*/

|

||||

function getInput(name, options) {

|

||||

const val = process.env[`INPUT_${name.replace(/ /g, '_').toUpperCase()}`] || '';

|

||||

if (options && options.required && !val) {

|

||||

throw new Error(`Input required and not supplied: ${name}`);

|

||||

}

|

||||

if (options && options.trimWhitespace === false) {

|

||||

return val;

|

||||

}

|

||||

return val.trim();

|

||||

}

|

||||

exports.getInput = getInput;

|

||||

/**

|

||||

* Gets the values of a multiline input. Each value is also trimmed.

|

||||

*

|

||||

* @param name name of the input to get

|

||||

* @param options optional. See InputOptions.

|

||||

* @returns string[]

|

||||

*

|

||||

*/

|

||||

function getMultilineInput(name, options) {

|

||||

const inputs = getInput(name, options)

|

||||

.split('\n')

|

||||

.filter(x => x !== '');

|

||||

return inputs;

|

||||

}

|

||||

exports.getMultilineInput = getMultilineInput;

|

||||

/**

|

||||

* Gets the input value of the boolean type in the YAML 1.2 "core schema" specification.

|

||||

* Support boolean input list: `true | True | TRUE | false | False | FALSE` .

|

||||

* The return value is also in boolean type.

|

||||

* ref: https://yaml.org/spec/1.2/spec.html#id2804923

|

||||

*

|

||||

* @param name name of the input to get

|

||||

* @param options optional. See InputOptions.

|

||||

* @returns boolean

|

||||

*/

|

||||

function getBooleanInput(name, options) {

|

||||

const trueValue = ['true', 'True', 'TRUE'];

|

||||

const falseValue = ['false', 'False', 'FALSE'];

|

||||

const val = getInput(name, options);

|

||||

if (trueValue.includes(val))

|

||||

return true;

|

||||

if (falseValue.includes(val))

|

||||

return false;

|

||||

throw new TypeError(`Input does not meet YAML 1.2 "Core Schema" specification: ${name}\n` +

|

||||

`Support boolean input list: \`true | True | TRUE | false | False | FALSE\``);

|

||||

}

|

||||

exports.getBooleanInput = getBooleanInput;

|

||||

/**

|

||||

* Sets the value of an output.

|

||||

*

|

||||

* @param name name of the output to set

|

||||

* @param value value to store. Non-string values will be converted to a string via JSON.stringify

|

||||

*/

|

||||

// eslint-disable-next-line @typescript-eslint/no-explicit-any

|

||||

function setOutput(name, value) {

|

||||

process.stdout.write(os.EOL);

|

||||

command_1.issueCommand('set-output', { name }, value);

|

||||

}

|

||||

exports.setOutput = setOutput;

|

||||

/**

|

||||

* Enables or disables the echoing of commands into stdout for the rest of the step.

|

||||

* Echoing is disabled by default if ACTIONS_STEP_DEBUG is not set.

|

||||

*

|

||||

*/

|

||||

function setCommandEcho(enabled) {

|

||||

command_1.issue('echo', enabled ? 'on' : 'off');

|

||||

}

|

||||

exports.setCommandEcho = setCommandEcho;

|

||||

//-----------------------------------------------------------------------

|

||||

// Results

|

||||

//-----------------------------------------------------------------------

|

||||

/**

|

||||

* Sets the action status to failed.

|

||||

* When the action exits it will be with an exit code of 1

|

||||

* @param message add error issue message

|

||||

*/

|

||||

function setFailed(message) {

|

||||

process.exitCode = ExitCode.Failure;

|

||||

error(message);

|

||||

}

|

||||

exports.setFailed = setFailed;

|

||||

//-----------------------------------------------------------------------

|

||||

// Logging Commands

|

||||

//-----------------------------------------------------------------------

|

||||

/**

|

||||

* Gets whether Actions Step Debug is on or not

|

||||

*/

|

||||

function isDebug() {

|

||||

return process.env['RUNNER_DEBUG'] === '1';

|

||||

}

|

||||

exports.isDebug = isDebug;

|

||||

/**

|

||||

* Writes debug message to user log

|

||||

* @param message debug message

|

||||

*/

|

||||

function debug(message) {

|

||||

command_1.issueCommand('debug', {}, message);

|

||||

}

|

||||

exports.debug = debug;

|

||||

/**

|

||||

* Adds an error issue

|

||||

* @param message error issue message. Errors will be converted to string via toString()

|

||||

* @param properties optional properties to add to the annotation.

|

||||

*/

|

||||

function error(message, properties = {}) {

|

||||

command_1.issueCommand('error', utils_1.toCommandProperties(properties), message instanceof Error ? message.toString() : message);

|

||||

}

|

||||

exports.error = error;

|

||||

/**

|

||||

* Adds a warning issue

|

||||

* @param message warning issue message. Errors will be converted to string via toString()

|

||||

* @param properties optional properties to add to the annotation.

|

||||

*/

|

||||

function warning(message, properties = {}) {

|

||||

command_1.issueCommand('warning', utils_1.toCommandProperties(properties), message instanceof Error ? message.toString() : message);

|

||||

}

|

||||

exports.warning = warning;

|

||||

/**

|

||||

* Adds a notice issue

|

||||

* @param message notice issue message. Errors will be converted to string via toString()

|

||||

* @param properties optional properties to add to the annotation.

|

||||

*/

|

||||

function notice(message, properties = {}) {

|

||||

command_1.issueCommand('notice', utils_1.toCommandProperties(properties), message instanceof Error ? message.toString() : message);

|

||||

}

|

||||

exports.notice = notice;

|

||||

/**

|

||||

* Writes info to the log via process.stdout.write.

|

||||

* @param message info message

|

||||

*/

|

||||

function info(message) {

|

||||

process.stdout.write(message + os.EOL);

|

||||

}

|

||||

exports.info = info;

|

||||

/**

|

||||

* Begin an output group.

|

||||

*

|

||||

* Output until the next `groupEnd` will be foldable in this group

|

||||

*

|

||||

* @param name The name of the output group

|

||||

*/

|

||||

function startGroup(name) {

|

||||

command_1.issue('group', name);

|

||||

}

|

||||

exports.startGroup = startGroup;

|

||||

/**

|

||||

* End an output group.

|

||||

*/

|

||||

function endGroup() {

|

||||

command_1.issue('endgroup');

|

||||

}

|

||||

exports.endGroup = endGroup;

|

||||

/**

|

||||

* Wrap an asynchronous function call in a group.

|

||||

*

|

||||

* Returns the same type as the function itself.

|

||||

*

|

||||

* @param name The name of the group

|

||||

* @param fn The function to wrap in the group

|

||||

*/

|

||||

function group(name, fn) {

|

||||

return __awaiter(this, void 0, void 0, function* () {

|

||||

startGroup(name);

|

||||

let result;

|

||||

try {

|

||||

result = yield fn();

|

||||

}

|

||||

finally {

|

||||

endGroup();

|

||||

}

|

||||

return result;

|

||||

});

|

||||

}

|

||||

exports.group = group;

|

||||

//-----------------------------------------------------------------------

|

||||

// Wrapper action state

|

||||

//-----------------------------------------------------------------------

|

||||

/**

|

||||

* Saves state for current action, the state can only be retrieved by this action's post job execution.

|

||||

*

|

||||

* @param name name of the state to store

|

||||

* @param value value to store. Non-string values will be converted to a string via JSON.stringify

|

||||

*/

|

||||

// eslint-disable-next-line @typescript-eslint/no-explicit-any

|

||||

function saveState(name, value) {

|

||||

command_1.issueCommand('save-state', { name }, value);

|

||||

}

|

||||

exports.saveState = saveState;

|

||||

/**

|

||||

* Gets the value of a state set by this action's main execution.

|

||||

*

|

||||

* @param name name of the state to get

|

||||

* @returns string

|

||||

*/

|

||||

function getState(name) {

|

||||

return process.env[`STATE_${name}`] || '';

|

||||

}

|

||||

exports.getState = getState;

|

||||

function getIDToken(aud) {

|

||||

return __awaiter(this, void 0, void 0, function* () {

|

||||

return yield oidc_utils_1.OidcClient.getIDToken(aud);

|

||||

});

|

||||

}

|

||||

exports.getIDToken = getIDToken;

|

||||

//# sourceMappingURL=core.js.map
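Review note: the input lookup in `getInput` above can be illustrated in isolation. The sketch below is a hedged restatement, not the library's code: `inputEnvName` and `readInput` are hypothetical names introduced here, and the environment is passed in as a plain object so the example runs outside an Actions runner.

```javascript
// Hypothetical helper: derives the environment variable name the way
// getInput does above (spaces -> underscores, then upper-case).
function inputEnvName(name) {
  return `INPUT_${name.replace(/ /g, '_').toUpperCase()}`;
}

// Hypothetical helper: same required/trim handling as getInput, but reading
// from an injected env object instead of process.env directly.
function readInput(name, env = process.env, options = {}) {
  const val = env[inputEnvName(name)] || '';
  if (options.required && !val) {
    throw new Error(`Input required and not supplied: ${name}`);
  }
  // Trimming is the default; it is skipped only when trimWhitespace === false.
  return options.trimWhitespace === false ? val : val.trim();
}

const fakeEnv = { INPUT_MY_TOKEN: '  abc  ' };
console.log(inputEnvName('my token'));
console.log(JSON.stringify(readInput('my token', fakeEnv)));
console.log(JSON.stringify(readInput('my token', fakeEnv, { trimWhitespace: false })));
```

Only spaces are rewritten; other characters such as hyphens pass through to the variable name unchanged.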

|

||||

1

node_modules/@actions/core/lib/core.js.map

generated

vendored

Normal file

|

|

@ -0,0 +1 @@

|

|||

{"version":3,"file":"core.js","sourceRoot":"","sources":["../src/core.ts"],"names":[],"mappings":";;;;;;;;;;;;;;;;;;;;;;;;;;;;;;;AAAA,uCAA6C;AAC7C,iDAA+D;AAC/D,mCAA2D;AAE3D,uCAAwB;AACxB,2CAA4B;AAE5B,6CAAuC;AAavC;;GAEG;AACH,IAAY,QAUX;AAVD,WAAY,QAAQ;IAClB;;OAEG;IACH,6CAAW,CAAA;IAEX;;OAEG;IACH,6CAAW,CAAA;AACb,CAAC,EAVW,QAAQ,GAAR,gBAAQ,KAAR,gBAAQ,QAUnB;AAuCD,yEAAyE;AACzE,YAAY;AACZ,yEAAyE;AAEzE;;;;GAIG;AACH,8DAA8D;AAC9D,SAAgB,cAAc,CAAC,IAAY,EAAE,GAAQ;IACnD,MAAM,YAAY,GAAG,sBAAc,CAAC,GAAG,CAAC,CAAA;IACxC,OAAO,CAAC,GAAG,CAAC,IAAI,CAAC,GAAG,YAAY,CAAA;IAEhC,MAAM,QAAQ,GAAG,OAAO,CAAC,GAAG,CAAC,YAAY,CAAC,IAAI,EAAE,CAAA;IAChD,IAAI,QAAQ,EAAE;QACZ,MAAM,SAAS,GAAG,qCAAqC,CAAA;QACvD,MAAM,YAAY,GAAG,GAAG,IAAI,KAAK,SAAS,GAAG,EAAE,CAAC,GAAG,GAAG,YAAY,GAAG,EAAE,CAAC,GAAG,GAAG,SAAS,EAAE,CAAA;QACzF,2BAAgB,CAAC,KAAK,EAAE,YAAY,CAAC,CAAA;KACtC;SAAM;QACL,sBAAY,CAAC,SAAS,EAAE,EAAC,IAAI,EAAC,EAAE,YAAY,CAAC,CAAA;KAC9C;AACH,CAAC;AAZD,wCAYC;AAED;;;GAGG;AACH,SAAgB,SAAS,CAAC,MAAc;IACtC,sBAAY,CAAC,UAAU,EAAE,EAAE,EAAE,MAAM,CAAC,CAAA;AACtC,CAAC;AAFD,8BAEC;AAED;;;GAGG;AACH,SAAgB,OAAO,CAAC,SAAiB;IACvC,MAAM,QAAQ,GAAG,OAAO,CAAC,GAAG,CAAC,aAAa,CAAC,IAAI,EAAE,CAAA;IACjD,IAAI,QAAQ,EAAE;QACZ,2BAAgB,CAAC,MAAM,EAAE,SAAS,CAAC,CAAA;KACpC;SAAM;QACL,sBAAY,CAAC,UAAU,EAAE,EAAE,EAAE,SAAS,CAAC,CAAA;KACxC;IACD,OAAO,CAAC,GAAG,CAAC,MAAM,CAAC,GAAG,GAAG,SAAS,GAAG,IAAI,CAAC,SAAS,GAAG,OAAO,CAAC,GAAG,CAAC,MAAM,CAAC,EAAE,CAAA;AAC7E,CAAC;AARD,0BAQC;AAED;;;;;;;;GAQG;AACH,SAAgB,QAAQ,CAAC,IAAY,EAAE,OAAsB;IAC3D,MAAM,GAAG,GACP,OAAO,CAAC,GAAG,CAAC,SAAS,IAAI,CAAC,OAAO,CAAC,IAAI,EAAE,GAAG,CAAC,CAAC,WAAW,EAAE,EAAE,CAAC,IAAI,EAAE,CAAA;IACrE,IAAI,OAAO,IAAI,OAAO,CAAC,QAAQ,IAAI,CAAC,GAAG,EAAE;QACvC,MAAM,IAAI,KAAK,CAAC,oCAAoC,IAAI,EAAE,CAAC,CAAA;KAC5D;IAED,IAAI,OAAO,IAAI,OAAO,CAAC,cAAc,KAAK,KAAK,EAAE;QAC/C,OAAO,GAAG,CAAA;KACX;IAED,OAAO,GAAG,CAAC,IAAI,EAAE,CAAA;AACnB,CAAC;AAZD,4BAYC;AAED;;;;;;;GAOG;AACH,SAAgB,iBAAiB,CAC/B,IAAY,EACZ,OAAsB;IAEtB,MAAM,MAAM,GAAa,QAAQ,CAAC,IAAI,EAAE,OAAO,CAAC;SAC7C,KAAK,CAAC,IAAI,CAAC;SACX,MAAM,CAAC,CAAC,CAAC,EAAE,CAAC
,CAAC,KAAK,EAAE,CAAC,CAAA;IAExB,OAAO,MAAM,CAAA;AACf,CAAC;AATD,8CASC;AAED;;;;;;;;;GASG;AACH,SAAgB,eAAe,CAAC,IAAY,EAAE,OAAsB;IAClE,MAAM,SAAS,GAAG,CAAC,MAAM,EAAE,MAAM,EAAE,MAAM,CAAC,CAAA;IAC1C,MAAM,UAAU,GAAG,CAAC,OAAO,EAAE,OAAO,EAAE,OAAO,CAAC,CAAA;IAC9C,MAAM,GAAG,GAAG,QAAQ,CAAC,IAAI,EAAE,OAAO,CAAC,CAAA;IACnC,IAAI,SAAS,CAAC,QAAQ,CAAC,GAAG,CAAC;QAAE,OAAO,IAAI,CAAA;IACxC,IAAI,UAAU,CAAC,QAAQ,CAAC,GAAG,CAAC;QAAE,OAAO,KAAK,CAAA;IAC1C,MAAM,IAAI,SAAS,CACjB,6DAA6D,IAAI,IAAI;QACnE,4EAA4E,CAC/E,CAAA;AACH,CAAC;AAVD,0CAUC;AAED;;;;;GAKG;AACH,8DAA8D;AAC9D,SAAgB,SAAS,CAAC,IAAY,EAAE,KAAU;IAChD,OAAO,CAAC,MAAM,CAAC,KAAK,CAAC,EAAE,CAAC,GAAG,CAAC,CAAA;IAC5B,sBAAY,CAAC,YAAY,EAAE,EAAC,IAAI,EAAC,EAAE,KAAK,CAAC,CAAA;AAC3C,CAAC;AAHD,8BAGC;AAED;;;;GAIG;AACH,SAAgB,cAAc,CAAC,OAAgB;IAC7C,eAAK,CAAC,MAAM,EAAE,OAAO,CAAC,CAAC,CAAC,IAAI,CAAC,CAAC,CAAC,KAAK,CAAC,CAAA;AACvC,CAAC;AAFD,wCAEC;AAED,yEAAyE;AACzE,UAAU;AACV,yEAAyE;AAEzE;;;;GAIG;AACH,SAAgB,SAAS,CAAC,OAAuB;IAC/C,OAAO,CAAC,QAAQ,GAAG,QAAQ,CAAC,OAAO,CAAA;IAEnC,KAAK,CAAC,OAAO,CAAC,CAAA;AAChB,CAAC;AAJD,8BAIC;AAED,yEAAyE;AACzE,mBAAmB;AACnB,yEAAyE;AAEzE;;GAEG;AACH,SAAgB,OAAO;IACrB,OAAO,OAAO,CAAC,GAAG,CAAC,cAAc,CAAC,KAAK,GAAG,CAAA;AAC5C,CAAC;AAFD,0BAEC;AAED;;;GAGG;AACH,SAAgB,KAAK,CAAC,OAAe;IACnC,sBAAY,CAAC,OAAO,EAAE,EAAE,EAAE,OAAO,CAAC,CAAA;AACpC,CAAC;AAFD,sBAEC;AAED;;;;GAIG;AACH,SAAgB,KAAK,CACnB,OAAuB,EACvB,aAAmC,EAAE;IAErC,sBAAY,CACV,OAAO,EACP,2BAAmB,CAAC,UAAU,CAAC,EAC/B,OAAO,YAAY,KAAK,CAAC,CAAC,CAAC,OAAO,CAAC,QAAQ,EAAE,CAAC,CAAC,CAAC,OAAO,CACxD,CAAA;AACH,CAAC;AATD,sBASC;AAED;;;;GAIG;AACH,SAAgB,OAAO,CACrB,OAAuB,EACvB,aAAmC,EAAE;IAErC,sBAAY,CACV,SAAS,EACT,2BAAmB,CAAC,UAAU,CAAC,EAC/B,OAAO,YAAY,KAAK,CAAC,CAAC,CAAC,OAAO,CAAC,QAAQ,EAAE,CAAC,CAAC,CAAC,OAAO,CACxD,CAAA;AACH,CAAC;AATD,0BASC;AAED;;;;GAIG;AACH,SAAgB,MAAM,CACpB,OAAuB,EACvB,aAAmC,EAAE;IAErC,sBAAY,CACV,QAAQ,EACR,2BAAmB,CAAC,UAAU,CAAC,EAC/B,OAAO,YAAY,KAAK,CAAC,CAAC,CAAC,OAAO,CAAC,QAAQ,EAAE,CAAC,CAAC,CAAC,OAAO,CACxD,CAAA;AACH,CAAC;AATD,wBASC;AAED;;;GAGG;AACH,SAAgB,IAAI,CAAC,OAAe;IAClC,OAAO,CAAC,MAAM,CA
AC,KAAK,CAAC,OAAO,GAAG,EAAE,CAAC,GAAG,CAAC,CAAA;AACxC,CAAC;AAFD,oBAEC;AAED;;;;;;GAMG;AACH,SAAgB,UAAU,CAAC,IAAY;IACrC,eAAK,CAAC,OAAO,EAAE,IAAI,CAAC,CAAA;AACtB,CAAC;AAFD,gCAEC;AAED;;GAEG;AACH,SAAgB,QAAQ;IACtB,eAAK,CAAC,UAAU,CAAC,CAAA;AACnB,CAAC;AAFD,4BAEC;AAED;;;;;;;GAOG;AACH,SAAsB,KAAK,CAAI,IAAY,EAAE,EAAoB;;QAC/D,UAAU,CAAC,IAAI,CAAC,CAAA;QAEhB,IAAI,MAAS,CAAA;QAEb,IAAI;YACF,MAAM,GAAG,MAAM,EAAE,EAAE,CAAA;SACpB;gBAAS;YACR,QAAQ,EAAE,CAAA;SACX;QAED,OAAO,MAAM,CAAA;IACf,CAAC;CAAA;AAZD,sBAYC;AAED,yEAAyE;AACzE,uBAAuB;AACvB,yEAAyE;AAEzE;;;;;GAKG;AACH,8DAA8D;AAC9D,SAAgB,SAAS,CAAC,IAAY,EAAE,KAAU;IAChD,sBAAY,CAAC,YAAY,EAAE,EAAC,IAAI,EAAC,EAAE,KAAK,CAAC,CAAA;AAC3C,CAAC;AAFD,8BAEC;AAED;;;;;GAKG;AACH,SAAgB,QAAQ,CAAC,IAAY;IACnC,OAAO,OAAO,CAAC,GAAG,CAAC,SAAS,IAAI,EAAE,CAAC,IAAI,EAAE,CAAA;AAC3C,CAAC;AAFD,4BAEC;AAED,SAAsB,UAAU,CAAC,GAAY;;QAC3C,OAAO,MAAM,uBAAU,CAAC,UAAU,CAAC,GAAG,CAAC,CAAA;IACzC,CAAC;CAAA;AAFD,gCAEC"}

|

||||

1

node_modules/@actions/core/lib/file-command.d.ts

generated

vendored

Normal file

|

|

@ -0,0 +1 @@

|

|||

export declare function issueCommand(command: string, message: any): void;

|

||||

42

node_modules/@actions/core/lib/file-command.js

generated

vendored

Normal file

|

|

@ -0,0 +1,42 @@

|

|||

"use strict";

|

||||

// For internal use, subject to change.

|

||||

var __createBinding = (this && this.__createBinding) || (Object.create ? (function(o, m, k, k2) {

|

||||

if (k2 === undefined) k2 = k;

|

||||

Object.defineProperty(o, k2, { enumerable: true, get: function() { return m[k]; } });

|

||||

}) : (function(o, m, k, k2) {

|

||||

if (k2 === undefined) k2 = k;

|

||||

o[k2] = m[k];

|

||||

}));

|

||||

var __setModuleDefault = (this && this.__setModuleDefault) || (Object.create ? (function(o, v) {

|

||||

Object.defineProperty(o, "default", { enumerable: true, value: v });

|

||||

}) : function(o, v) {

|

||||

o["default"] = v;

|

||||

});

|

||||

var __importStar = (this && this.__importStar) || function (mod) {

|

||||

if (mod && mod.__esModule) return mod;

|

||||

var result = {};

|

||||

if (mod != null) for (var k in mod) if (k !== "default" && Object.hasOwnProperty.call(mod, k)) __createBinding(result, mod, k);

|

||||

__setModuleDefault(result, mod);

|

||||

return result;

|

||||

};

|

||||

Object.defineProperty(exports, "__esModule", { value: true });

|

||||

exports.issueCommand = void 0;

|

||||

// We use any as a valid input type

|

||||

/* eslint-disable @typescript-eslint/no-explicit-any */

|

||||

const fs = __importStar(require("fs"));

|

||||

const os = __importStar(require("os"));

|

||||

const utils_1 = require("./utils");

|

||||

function issueCommand(command, message) {

|

||||

const filePath = process.env[`GITHUB_${command}`];

|

||||

if (!filePath) {

|

||||

throw new Error(`Unable to find environment variable for file command ${command}`);

|

||||

}

|

||||

if (!fs.existsSync(filePath)) {

|

||||

throw new Error(`Missing file at path: ${filePath}`);

|

||||

}

|

||||

fs.appendFileSync(filePath, `${utils_1.toCommandValue(message)}${os.EOL}`, {

|

||||

encoding: 'utf8'

|

||||

});

|

||||

}

|

||||

exports.issueCommand = issueCommand;

|

||||

//# sourceMappingURL=file-command.js.map
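Review note: `exportVariable` in `core.js` builds a heredoc-style record and `issueCommand` above appends it to the file named by `GITHUB_ENV`. The following is a minimal sketch of that record format. `buildEnvRecord` is a hypothetical helper, and a temporary file stands in for the runner-provided `GITHUB_ENV` path.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: builds the same "name<<delimiter / value / delimiter"
// record that exportVariable assembles before calling issueCommand('ENV', ...).
function buildEnvRecord(name, value) {
  // Spelling of the delimiter is kept exactly as it appears in the source.
  const delimiter = '_GitHubActionsFileCommandDelimeter_';
  return `${name}<<${delimiter}${os.EOL}${value}${os.EOL}${delimiter}`;
}

// A temp file stands in for GITHUB_ENV; issueCommand requires the file to exist.
const envFile = path.join(fs.mkdtempSync(path.join(os.tmpdir(), 'ghenv-')), 'env');
fs.writeFileSync(envFile, '');

// Same append call shape as issueCommand: record plus a trailing EOL, UTF-8.
fs.appendFileSync(envFile, `${buildEnvRecord('GREETING', 'hello\nworld')}${os.EOL}`, {
  encoding: 'utf8'
});
console.log(fs.readFileSync(envFile, 'utf8'));
```

The delimiter framing is what lets a value contain newlines without being split into separate records.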

|

||||

1

node_modules/@actions/core/lib/file-command.js.map

generated

vendored

Normal file

|

|

@ -0,0 +1 @@

|

|||

{"version":3,"file":"file-command.js","sourceRoot":"","sources":["../src/file-command.ts"],"names":[],"mappings":";AAAA,uCAAuC;;;;;;;;;;;;;;;;;;;;;;AAEvC,mCAAmC;AACnC,uDAAuD;AAEvD,uCAAwB;AACxB,uCAAwB;AACxB,mCAAsC;AAEtC,SAAgB,YAAY,CAAC,OAAe,EAAE,OAAY;IACxD,MAAM,QAAQ,GAAG,OAAO,CAAC,GAAG,CAAC,UAAU,OAAO,EAAE,CAAC,CAAA;IACjD,IAAI,CAAC,QAAQ,EAAE;QACb,MAAM,IAAI,KAAK,CACb,wDAAwD,OAAO,EAAE,CAClE,CAAA;KACF;IACD,IAAI,CAAC,EAAE,CAAC,UAAU,CAAC,QAAQ,CAAC,EAAE;QAC5B,MAAM,IAAI,KAAK,CAAC,yBAAyB,QAAQ,EAAE,CAAC,CAAA;KACrD;IAED,EAAE,CAAC,cAAc,CAAC,QAAQ,EAAE,GAAG,sBAAc,CAAC,OAAO,CAAC,GAAG,EAAE,CAAC,GAAG,EAAE,EAAE;QACjE,QAAQ,EAAE,MAAM;KACjB,CAAC,CAAA;AACJ,CAAC;AAdD,oCAcC"}

|

||||

7

node_modules/@actions/core/lib/oidc-utils.d.ts

generated

vendored

Normal file

|

|

@ -0,0 +1,7 @@

|

|||

export declare class OidcClient {

|

||||

private static createHttpClient;

|

||||

private static getRequestToken;

|

||||

private static getIDTokenUrl;

|

||||

private static getCall;

|

||||

static getIDToken(audience?: string): Promise<string>;

|

||||

}

|

||||

77

node_modules/@actions/core/lib/oidc-utils.js

generated

vendored

Normal file

|

|

@ -0,0 +1,77 @@

|

|||

"use strict";

|

||||

var __awaiter = (this && this.__awaiter) || function (thisArg, _arguments, P, generator) {

|

||||

function adopt(value) { return value instanceof P ? value : new P(function (resolve) { resolve(value); }); }

|

||||

return new (P || (P = Promise))(function (resolve, reject) {

|

||||

function fulfilled(value) { try { step(generator.next(value)); } catch (e) { reject(e); } }

|

||||

function rejected(value) { try { step(generator["throw"](value)); } catch (e) { reject(e); } }

|

||||

function step(result) { result.done ? resolve(result.value) : adopt(result.value).then(fulfilled, rejected); }

|

||||

step((generator = generator.apply(thisArg, _arguments || [])).next());

|

||||

});

|

||||

};

|

||||

Object.defineProperty(exports, "__esModule", { value: true });

|

||||

exports.OidcClient = void 0;

|

||||

const http_client_1 = require("@actions/http-client");

|

||||

const auth_1 = require("@actions/http-client/auth");

|

||||

const core_1 = require("./core");

|

||||

class OidcClient {

|

||||

static createHttpClient(allowRetry = true, maxRetry = 10) {

|

||||

const requestOptions = {

|

||||

allowRetries: allowRetry,

|

||||

maxRetries: maxRetry

|

||||

};

|

||||

return new http_client_1.HttpClient('actions/oidc-client', [new auth_1.BearerCredentialHandler(OidcClient.getRequestToken())], requestOptions);

|

||||

}

|

||||

static getRequestToken() {

|

||||

const token = process.env['ACTIONS_ID_TOKEN_REQUEST_TOKEN'];

|

||||

if (!token) {

|

||||

throw new Error('Unable to get ACTIONS_ID_TOKEN_REQUEST_TOKEN env variable');

|

||||

}

|

||||

return token;

|

||||

}

|

||||

static getIDTokenUrl() {

|

||||

const runtimeUrl = process.env['ACTIONS_ID_TOKEN_REQUEST_URL'];

|

||||

if (!runtimeUrl) {

|

||||

throw new Error('Unable to get ACTIONS_ID_TOKEN_REQUEST_URL env variable');

|

||||

}

|

||||

return runtimeUrl;

|

||||

}

|

||||

static getCall(id_token_url) {

|

||||

var _a;

|

||||

return __awaiter(this, void 0, void 0, function* () {

|

||||

const httpclient = OidcClient.createHttpClient();

|

||||

const res = yield httpclient

|

||||

.getJson(id_token_url)

|

||||

.catch(error => {

|

||||

throw new Error(`Failed to get ID Token. \n

|

||||

Error Code : ${error.statusCode}\n

|

||||

Error Message: ${error.result.message}`);

|

||||

});

|

||||

const id_token = (_a = res.result) === null || _a === void 0 ? void 0 : _a.value;

|

||||

if (!id_token) {

|

||||

throw new Error('Response json body do not have ID Token field');

|

||||

}

|

||||

return id_token;

|

||||

});

|

||||

}

|

||||

static getIDToken(audience) {

|

||||

return __awaiter(this, void 0, void 0, function* () {

|

||||

try {

|

||||

// New ID Token is requested from action service

|

||||

let id_token_url = OidcClient.getIDTokenUrl();

|

||||

if (audience) {

|

||||

const encodedAudience = encodeURIComponent(audience);

|

||||

id_token_url = `${id_token_url}&audience=${encodedAudience}`;

|

||||

}

|

||||

core_1.debug(`ID token url is ${id_token_url}`);

|

||||

const id_token = yield OidcClient.getCall(id_token_url);

|

||||

core_1.setSecret(id_token);

|

||||

return id_token;

|

||||

}

|

||||

catch (error) {

|

||||

throw new Error(`Error message: ${error.message}`);

|

||||

}

|

||||

});

|

||||

}

|

||||

}

|

||||

exports.OidcClient = OidcClient;

|

||||

//# sourceMappingURL=oidc-utils.js.map
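Review note: `getIDToken` above appends the audience to the request URL with `&audience=...`, which appears to assume that the value of `ACTIONS_ID_TOKEN_REQUEST_URL` already carries a query string. A small sketch of just that step follows. `withAudience` is a hypothetical name and the URL is a made-up placeholder, not a real endpoint.

```javascript
// Hypothetical helper: mirrors the URL handling in OidcClient.getIDToken.
function withAudience(idTokenUrl, audience) {
  if (!audience) {
    // No audience requested: the runtime URL is used unchanged.
    return idTokenUrl;
  }
  // The audience is percent-encoded before being appended as a query parameter.
  return `${idTokenUrl}&audience=${encodeURIComponent(audience)}`;
}

// Placeholder URL for illustration only (assumed to already contain a query).
const baseUrl = 'https://example.invalid/token?api-version=1';
console.log(withAudience(baseUrl));
console.log(withAudience(baseUrl, 'api://my service'));
```

If the base URL had no `?` component, the appended `&audience=` would not form a valid query string, so callers depend on the runner supplying a URL in the expected shape.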

|

||||

1

node_modules/@actions/core/lib/oidc-utils.js.map

generated

vendored

Normal file

|

|

@ -0,0 +1 @@

|

|||

{"version":3,"file":"oidc-utils.js","sourceRoot":"","sources":["../src/oidc-utils.ts"],"names":[],"mappings":";;;;;;;;;;;;AAGA,sDAA+C;AAC/C,oDAAiE;AACjE,iCAAuC;AAKvC,MAAa,UAAU;IACb,MAAM,CAAC,gBAAgB,CAC7B,UAAU,GAAG,IAAI,EACjB,QAAQ,GAAG,EAAE;QAEb,MAAM,cAAc,GAAoB;YACtC,YAAY,EAAE,UAAU;YACxB,UAAU,EAAE,QAAQ;SACrB,CAAA;QAED,OAAO,IAAI,wBAAU,CACnB,qBAAqB,EACrB,CAAC,IAAI,8BAAuB,CAAC,UAAU,CAAC,eAAe,EAAE,CAAC,CAAC,EAC3D,cAAc,CACf,CAAA;IACH,CAAC;IAEO,MAAM,CAAC,eAAe;QAC5B,MAAM,KAAK,GAAG,OAAO,CAAC,GAAG,CAAC,gCAAgC,CAAC,CAAA;QAC3D,IAAI,CAAC,KAAK,EAAE;YACV,MAAM,IAAI,KAAK,CACb,2DAA2D,CAC5D,CAAA;SACF;QACD,OAAO,KAAK,CAAA;IACd,CAAC;IAEO,MAAM,CAAC,aAAa;QAC1B,MAAM,UAAU,GAAG,OAAO,CAAC,GAAG,CAAC,8BAA8B,CAAC,CAAA;QAC9D,IAAI,CAAC,UAAU,EAAE;YACf,MAAM,IAAI,KAAK,CAAC,yDAAyD,CAAC,CAAA;SAC3E;QACD,OAAO,UAAU,CAAA;IACnB,CAAC;IAEO,MAAM,CAAO,OAAO,CAAC,YAAoB;;;YAC/C,MAAM,UAAU,GAAG,UAAU,CAAC,gBAAgB,EAAE,CAAA;YAEhD,MAAM,GAAG,GAAG,MAAM,UAAU;iBACzB,OAAO,CAAgB,YAAY,CAAC;iBACpC,KAAK,CAAC,KAAK,CAAC,EAAE;gBACb,MAAM,IAAI,KAAK,CACb;uBACa,KAAK,CAAC,UAAU;yBACd,KAAK,CAAC,MAAM,CAAC,OAAO,EAAE,CACtC,CAAA;YACH,CAAC,CAAC,CAAA;YAEJ,MAAM,QAAQ,SAAG,GAAG,CAAC,MAAM,0CAAE,KAAK,CAAA;YAClC,IAAI,CAAC,QAAQ,EAAE;gBACb,MAAM,IAAI,KAAK,CAAC,+CAA+C,CAAC,CAAA;aACjE;YACD,OAAO,QAAQ,CAAA;;KAChB;IAED,MAAM,CAAO,UAAU,CAAC,QAAiB;;YACvC,IAAI;gBACF,gDAAgD;gBAChD,IAAI,YAAY,GAAW,UAAU,CAAC,aAAa,EAAE,CAAA;gBACrD,IAAI,QAAQ,EAAE;oBACZ,MAAM,eAAe,GAAG,kBAAkB,CAAC,QAAQ,CAAC,CAAA;oBACpD,YAAY,GAAG,GAAG,YAAY,aAAa,eAAe,EAAE,CAAA;iBAC7D;gBAED,YAAK,CAAC,mBAAmB,YAAY,EAAE,CAAC,CAAA;gBAExC,MAAM,QAAQ,GAAG,MAAM,UAAU,CAAC,OAAO,CAAC,YAAY,CAAC,CAAA;gBACvD,gBAAS,CAAC,QAAQ,CAAC,CAAA;gBACnB,OAAO,QAAQ,CAAA;aAChB;YAAC,OAAO,KAAK,EAAE;gBACd,MAAM,IAAI,KAAK,CAAC,kBAAkB,KAAK,CAAC,OAAO,EAAE,CAAC,CAAA;aACnD;QACH,CAAC;KAAA;CACF;AAzED,gCAyEC"}

|

||||

14

node_modules/@actions/core/lib/utils.d.ts

generated

vendored

Normal file

|

|

@ -0,0 +1,14 @@

|

|||

import { AnnotationProperties } from './core';

|

||||

import { CommandProperties } from './command';

|

||||

/**

|

||||

* Sanitizes an input into a string so it can be passed into issueCommand safely

|

||||

* @param input input to sanitize into a string

|

||||

*/

|

||||

export declare function toCommandValue(input: any): string;

|

||||

/**

|

||||

*

|

||||

* @param annotationProperties

|

||||

* @returns The command properties to send with the actual annotation command

|

||||

* See IssueCommandProperties: https://github.com/actions/runner/blob/main/src/Runner.Worker/ActionCommandManager.cs#L646

|

||||

*/

|

||||

export declare function toCommandProperties(annotationProperties: AnnotationProperties): CommandProperties;

|

||||

40

node_modules/@actions/core/lib/utils.js

generated

vendored

Normal file

|

|

@ -0,0 +1,40 @@

|

|||

"use strict";

|

||||

// We use any as a valid input type

|

||||

/* eslint-disable @typescript-eslint/no-explicit-any */

|

||||

Object.defineProperty(exports, "__esModule", { value: true });

|

||||

exports.toCommandProperties = exports.toCommandValue = void 0;

|

||||

/**

|

||||

* Sanitizes an input into a string so it can be passed into issueCommand safely

|

||||

* @param input input to sanitize into a string

|

||||

*/

|

||||

function toCommandValue(input) {

|

||||

if (input === null || input === undefined) {

|

||||

return '';

|

||||

}

|

||||

else if (typeof input === 'string' || input instanceof String) {

|

||||

return input;

|

||||

}

|

||||

return JSON.stringify(input);

|

||||

}

|

||||

exports.toCommandValue = toCommandValue;

|

||||

/**

|

||||

*

|

||||

* @param annotationProperties

|

||||

* @returns The command properties to send with the actual annotation command

|

||||

* See IssueCommandProperties: https://github.com/actions/runner/blob/main/src/Runner.Worker/ActionCommandManager.cs#L646

|

||||

*/

|

||||

function toCommandProperties(annotationProperties) {

|

||||

if (!Object.keys(annotationProperties).length) {

|

||||

return {};

|

||||

}

|

||||

return {

|

||||

title: annotationProperties.title,

|

||||

file: annotationProperties.file,

|

||||

line: annotationProperties.startLine,

|

||||

endLine: annotationProperties.endLine,

|

||||

col: annotationProperties.startColumn,

|

||||

endColumn: annotationProperties.endColumn

|

||||

};

|

||||

}

|

||||

exports.toCommandProperties = toCommandProperties;

|

||||

//# sourceMappingURL=utils.js.map
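Review note: the two helpers in `utils.js` above are easy to exercise on their own. The sketch below copies their logic into a standalone snippet (an illustration, not an import of the package) to show how non-string values are serialized and how annotation property names are remapped.

```javascript
// Standalone copy of the logic shown above, for illustration only.
function toCommandValue(input) {
  if (input === null || input === undefined) {
    return '';
  } else if (typeof input === 'string' || input instanceof String) {
    return input;
  }
  // Everything else (numbers, booleans, objects, arrays) goes through JSON.
  return JSON.stringify(input);
}

function toCommandProperties(annotationProperties) {
  if (!Object.keys(annotationProperties).length) {
    return {};
  }
  // startLine -> line and startColumn -> col; the other names carry over.
  return {
    title: annotationProperties.title,
    file: annotationProperties.file,
    line: annotationProperties.startLine,
    endLine: annotationProperties.endLine,
    col: annotationProperties.startColumn,
    endColumn: annotationProperties.endColumn
  };
}

console.log(JSON.stringify(toCommandValue(null)));
console.log(toCommandValue({ a: 1 }));
console.log(toCommandProperties({ title: 'Lint', file: 'index.js', startLine: 3, startColumn: 7 }));
```

Note that a non-empty properties object always yields all six keys, with `undefined` for any that were not supplied.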

|

||||

1

node_modules/@actions/core/lib/utils.js.map

generated

vendored

Normal file

|

|

@ -0,0 +1 @@

|

|||

{"version":3,"file":"utils.js","sourceRoot":"","sources":["../src/utils.ts"],"names":[],"mappings":";AAAA,mCAAmC;AACnC,uDAAuD;;;AAKvD;;;GAGG;AACH,SAAgB,cAAc,CAAC,KAAU;IACvC,IAAI,KAAK,KAAK,IAAI,IAAI,KAAK,KAAK,SAAS,EAAE;QACzC,OAAO,EAAE,CAAA;KACV;SAAM,IAAI,OAAO,KAAK,KAAK,QAAQ,IAAI,KAAK,YAAY,MAAM,EAAE;QAC/D,OAAO,KAAe,CAAA;KACvB;IACD,OAAO,IAAI,CAAC,SAAS,CAAC,KAAK,CAAC,CAAA;AAC9B,CAAC;AAPD,wCAOC;AAED;;;;;GAKG;AACH,SAAgB,mBAAmB,CACjC,oBAA0C;IAE1C,IAAI,CAAC,MAAM,CAAC,IAAI,CAAC,oBAAoB,CAAC,CAAC,MAAM,EAAE;QAC7C,OAAO,EAAE,CAAA;KACV;IAED,OAAO;QACL,KAAK,EAAE,oBAAoB,CAAC,KAAK;QACjC,IAAI,EAAE,oBAAoB,CAAC,IAAI;QAC/B,IAAI,EAAE,oBAAoB,CAAC,SAAS;QACpC,OAAO,EAAE,oBAAoB,CAAC,OAAO;QACrC,GAAG,EAAE,oBAAoB,CAAC,WAAW;QACrC,SAAS,EAAE,oBAAoB,CAAC,SAAS;KAC1C,CAAA;AACH,CAAC;AAfD,kDAeC"}

|

||||

44

node_modules/@actions/core/package.json